Mentors

- Chandravaran Kunjeti

- Sai Kumar Dande

Members

- Soma Anil Kumar

- V Kartikeya

Abstract

3D mapping is a widely used technology today. One of its main benefits is that it provides modern methods for visualizing and gathering information: knowledge visualization and science mapping become easier when a 3D map of the area under study is available. In this project we aim to build a robot that can create a 3D map of a room or area while excluding humans and animals from the map. We implemented our code from scratch and optimized it to improve its results and reduce its computation time.

Theory

The depth of an object can be measured in several ways, for example with LiDAR, infrared sensors or stereo matching. In this project we use a stereo-based approach to calculate depth. Stereo matching identifies corresponding points in the two images and uses their displacement to reconstruct the geometry of the scene as a depth map.

Let us go through the topics needed for stereo vision one by one.

Stereo camera calibration

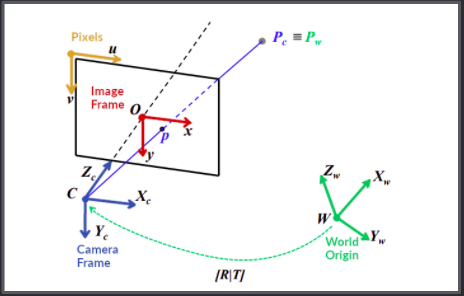

To understand where a point in the world appears in an image, we need to know the transforms involved in the projection. These transforms are described by the following parameters:

Extrinsic parameters

- Rotation matrix

- Translation vector

Intrinsic parameters

- Intrinsic matrix

- Focal length

- Optical centre
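The parameters above can be tied together with the pinhole camera model. The extrinsics (R, t) move a world point into camera coordinates. The intrinsic matrix K, built from the focal length f and the optical centre (cx, cy), then maps that point to pixel coordinates. The sketch below is a minimal pure-Python illustration. The values it uses (f = 500 px, optical centre (320, 240), a baseline of 0.1 along x) are hypothetical, as are the helper names, and none of them come from the project's calibration.

```python
def mat_vec(M, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]

def project_point(K, R, t, X_world):
    """Project a 3D world point to pixel coordinates (u, v)."""
    # Extrinsic step: world -> camera coordinates, X_cam = R * X_world + t
    X_cam = [a + b for a, b in zip(mat_vec(R, X_world), t)]
    # Intrinsic step: camera coordinates -> homogeneous pixel coordinates
    u_h, v_h, w = mat_vec(K, X_cam)
    # Perspective division
    return u_h / w, v_h / w

# Hypothetical calibration values (not from the project)
f, cx, cy = 500.0, 320.0, 240.0
K = [[f, 0.0, cx],
     [0.0, f, cy],
     [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]        # both cameras share the same orientation
t_left = [0.0, 0.0, 0.0]     # left camera at the world origin
t_right = [-0.1, 0.0, 0.0]   # right camera shifted 0.1 units along x (baseline)

X = [0.2, -0.1, 2.0]
print(project_point(K, R, t_left, X))   # (370.0, 215.0)
print(project_point(K, R, t_right, X))  # (345.0, 215.0)
```

With these assumed values the same point lands on the same image row in both cameras and differs only in its horizontal coordinate. That horizontal offset is the disparity that is later used to recover depth.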

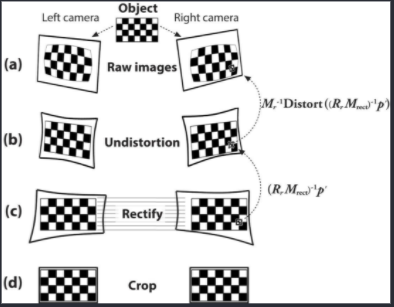

The stereo calibration parameters are used for rectification. Rectification removes lens distortion and transforms the stereo pair into a standard form in which the two images are aligned row by row, so that corresponding points lie on the same horizontal line. After the left and right images are rectified, computing the disparity map reduces to a search: for each pixel in the left image, we look for its match along the corresponding horizontal epipolar line (the same row) in the right image. Several methods can be used for this pixel matching; they are discussed in later sections.
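Rectification puts corresponding points on the same row, so the search for a match becomes one-dimensional. The minimal sketch below treats images as lists of rows of grey values. It compares single pixel intensities along row y of the right image for disparities 0 to max_disp, assuming the match lies at column x - d. The function name and the test images are hypothetical.

```python
def match_pixel_on_row(left, right, y, x, max_disp):
    """Return the disparity d that best matches left[y][x] on row y of right.

    Assumes rectified images, so the match lies at right[y][x - d] with d >= 0.
    """
    best_d, best_cost = 0, float("inf")
    for d in range(0, min(max_disp, x) + 1):
        # Absolute intensity difference between two single pixels
        cost = abs(left[y][x] - right[y][x - d])
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

# Hypothetical one-row images: the right row is the left row shifted by 2 pixels
left = [[10, 20, 30, 40, 50, 60]]
right = [[30, 40, 50, 60, 70, 80]]
print(match_pixel_on_row(left, right, y=0, x=4, max_disp=3))  # 2
```

Matching a single intensity value is fragile. In uniform or repetitive regions, many candidates have the same cost. This is why the block matching described below compares a whole neighbourhood instead.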

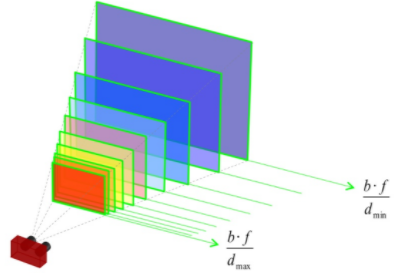

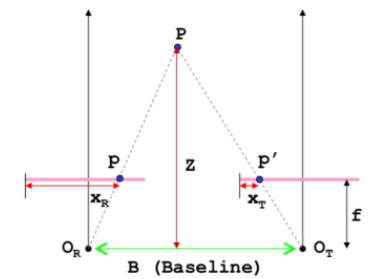

Disparity

After the images are rectified, the disparity between the left and right images is computed. Disparity is the horizontal distance between two corresponding points in the left and right images of a stereo pair. It arises because the two cameras are separated by a fixed distance (the baseline). Disparity is larger for objects closer to the camera and smaller for objects farther away. Using this principle, we compute a disparity map and convert it into a depth map.

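Depth = Base_length * Focal_length / Disparity

where

Base_length = distance between the left and right cameras

Focal_length = focal length of the cameras, in pixels

Disparity = XL - XR, the horizontal offset in pixels between corresponding points in the left and right images

Depth is obtained in the same unit as Base_length.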
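The formula can be applied directly to every value of a disparity map. The sketch below assumes a hypothetical baseline of 0.1 (metres) and a focal length of 500 pixels. A zero or negative disparity has no valid depth, and this sketch maps it to infinity as a simple convention of our own.

```python
import math

def depth_from_disparity(disparity_px, base_length, focal_length_px):
    """Depth = Base_length * Focal_length / Disparity (same unit as base_length)."""
    if disparity_px <= 0:
        # No valid match, or a point at infinity: treat depth as infinite
        return math.inf
    return base_length * focal_length_px / disparity_px

def depth_map(disparity_map, base_length, focal_length_px):
    """Convert a disparity map (list of rows) into a depth map."""
    return [[depth_from_disparity(d, base_length, focal_length_px) for d in row]
            for row in disparity_map]

# Hypothetical values: baseline 0.1 m, focal length 500 px
print(depth_from_disparity(25, 0.1, 500.0))  # 2.0 (metres)
print(depth_from_disparity(50, 0.1, 500.0))  # 1.0: larger disparity, closer object
print(depth_map([[25, 50], [0, 10]], 0.1, 500.0))  # [[2.0, 1.0], [inf, 5.0]]
```

Doubling the disparity halves the depth, which matches the observation that closer objects have larger disparity.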

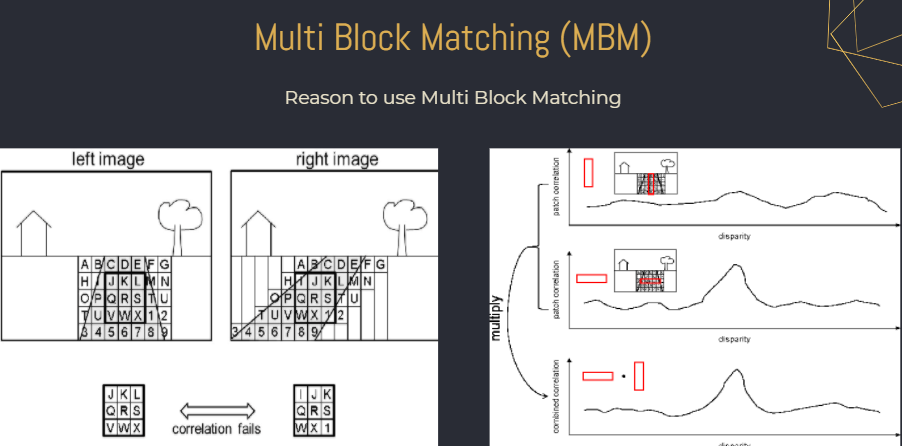

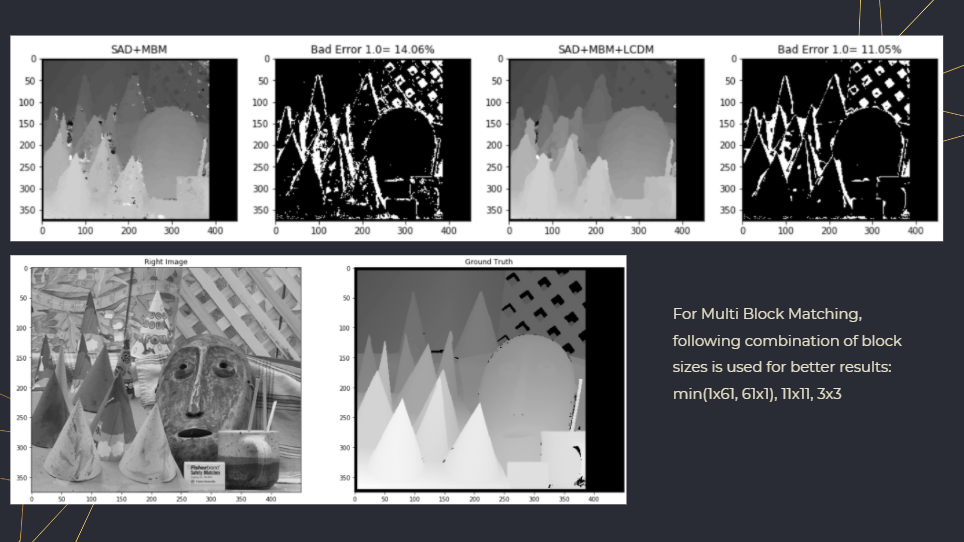

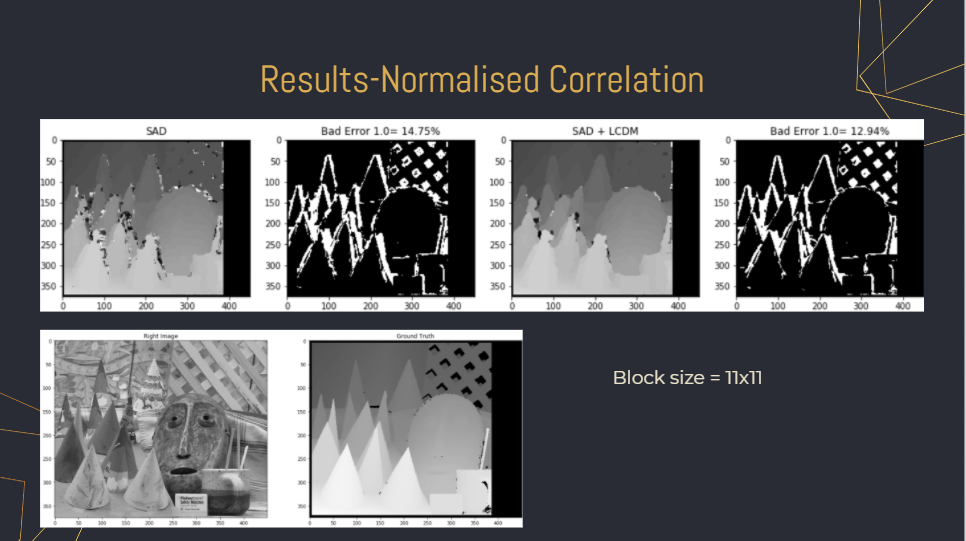

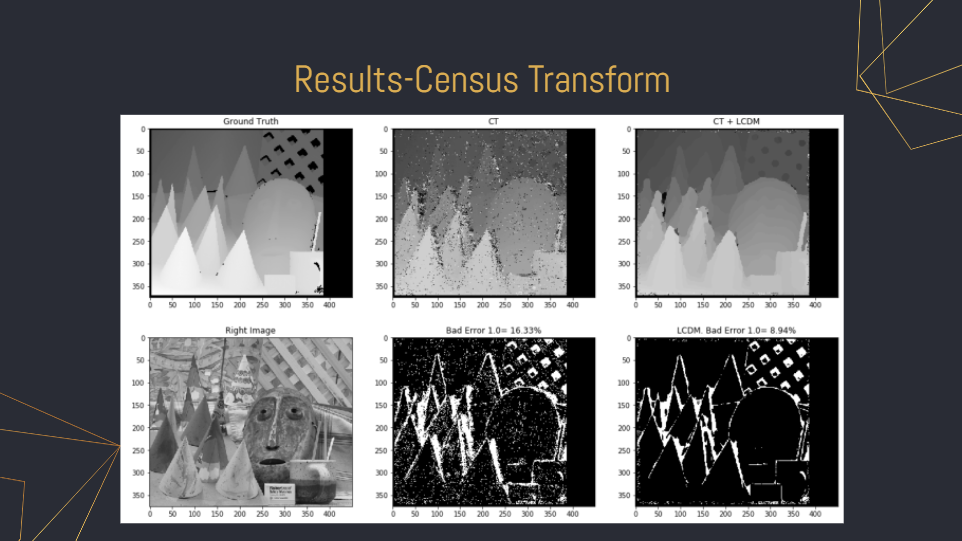

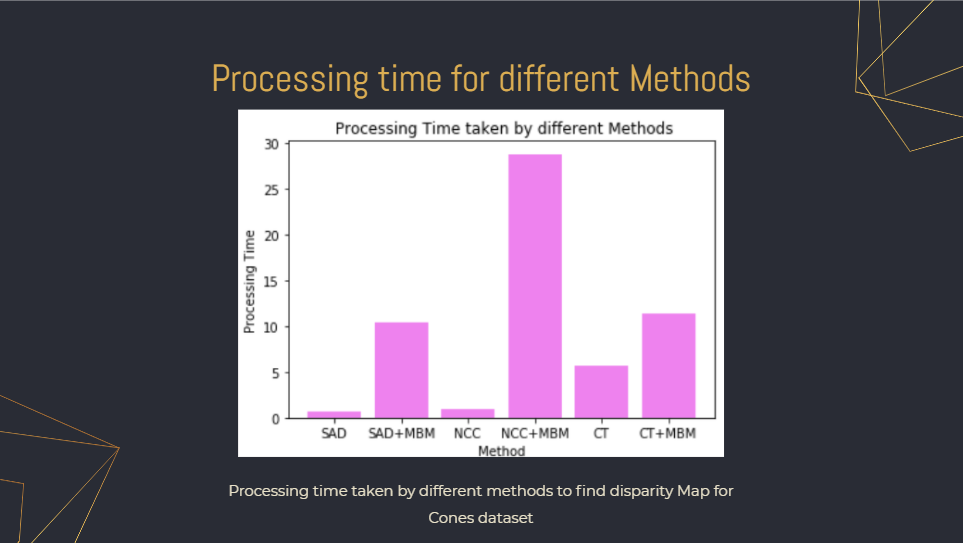

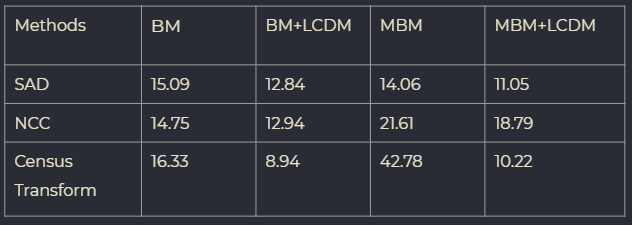

The disparity map is computed using multi-block matching.
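Multi-block matching evaluates several block shapes at each candidate disparity and combines their matching costs. Roughly, the intuition is that a large block is robust in weakly textured areas, while thin blocks are less disturbed by depth edges. The sketch below only illustrates that idea and is not the project's implementation. The three block shapes and the combination rule (a plain sum of per-block mean absolute differences) are our assumptions. The exact shapes and combination used by Einecke and Eggert are given in their paper. Names and test images are hypothetical.

```python
def window_mean_abs_diff(left, right, y, x, d, half_h, half_w):
    """Mean absolute difference between the window centred on (y, x) in left
    and the window centred on (y, x - d) in right; out-of-image pixels are ignored."""
    h, w = len(left), len(left[0])
    total, count = 0, 0
    for dy in range(-half_h, half_h + 1):
        for dx in range(-half_w, half_w + 1):
            yy, xl = y + dy, x + dx
            xr = xl - d
            if 0 <= yy < h and 0 <= xl < w and 0 <= xr < w:
                total += abs(left[yy][xl] - right[yy][xr])
                count += 1
    return total / count if count else float("inf")

# Hypothetical block shapes as (half_height, half_width): a square block,
# a horizontally elongated block and a vertically elongated block.
BLOCK_SHAPES = [(2, 2), (0, 3), (3, 0)]

def multi_block_disparity(left, right, y, x, max_disp, shapes=BLOCK_SHAPES):
    """Return the disparity with the lowest combined cost over all block shapes."""
    best_d, best_cost = 0, float("inf")
    for d in range(0, min(max_disp, x) + 1):
        # Assumption: combine the per-shape costs by summing them
        cost = sum(window_mean_abs_diff(left, right, y, x, d, hh, hw)
                   for hh, hw in shapes)
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

# Hypothetical test images: the right image is the left image shifted 3 pixels left
W, H = 12, 8
left = [[r * 13 + c * c for c in range(W)] for r in range(H)]
right = [[left[r][c + 3] if c + 3 < W else 0 for c in range(W)] for r in range(H)]
print(multi_block_disparity(left, right, y=4, x=7, max_disp=6))  # 3
```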

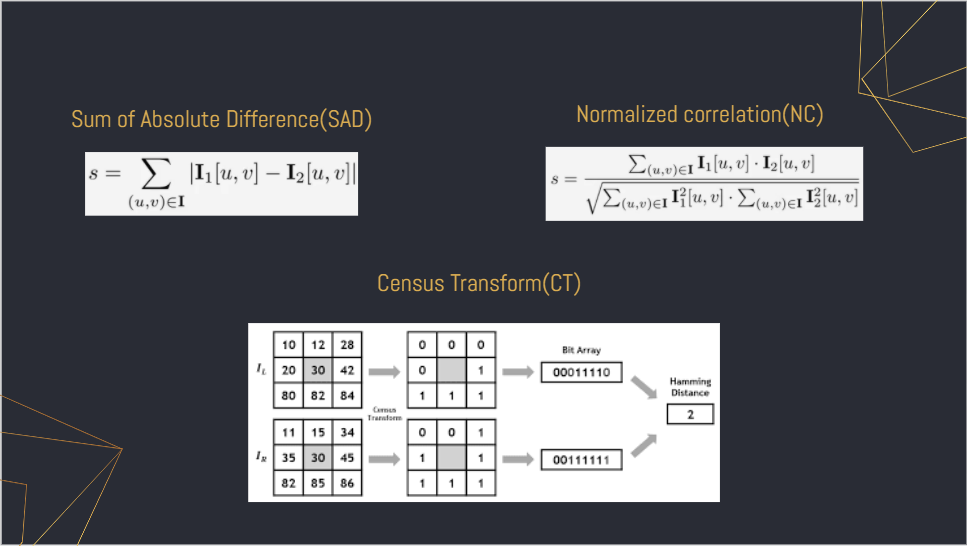

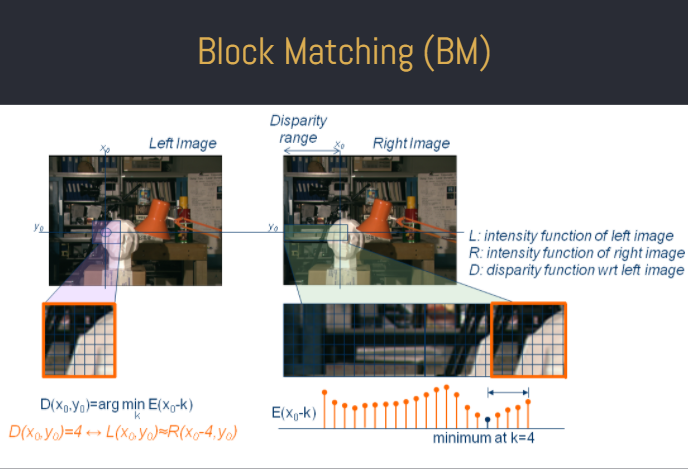

In block matching, instead of searching for a single pixel in the right image, we match a block of pixels. We take a window of pixels surrounding the pixel in the left image and search for the closest matching block in the right image. Once the best match is found, the disparity is calculated as XL - XR, where XL is the horizontal coordinate of the pixel in the left image and XR is the horizontal coordinate of its match in the right image.
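The procedure above can be sketched as follows. The sketch assumes rectified greyscale images stored as lists of rows, a square block of side 2*half+1, and the sum of absolute differences (SAD) as the matching cost. The text does not name a cost function, so SAD is our assumption, and the function names and test images are hypothetical. The search walks XR leftwards from XL and returns XL - XR for the best match.

```python
def sad(left, right, y, xl, xr, half):
    """Sum of absolute differences between the blocks centred on (y, xl) and (y, xr)."""
    return sum(abs(left[y + dy][xl + dx] - right[y + dy][xr + dx])
               for dy in range(-half, half + 1)
               for dx in range(-half, half + 1))

def block_match_disparity(left, right, y, xl, max_disp, half=2):
    """Return the disparity XL - XR of the best-matching block on row y."""
    h, w = len(left), len(left[0])
    if not (half <= y < h - half and half <= xl < w - half):
        raise ValueError("the block around (y, xl) must lie inside the image")
    best_xr, best_cost = xl, float("inf")
    # Candidate XR values lie on the same row, at or to the left of XL,
    # and far enough from the border for the whole block to fit.
    for xr in range(xl, max(half, xl - max_disp) - 1, -1):
        cost = sad(left, right, y, xl, xr, half)
        if cost < best_cost:
            best_xr, best_cost = xr, cost
    return xl - best_xr

# Hypothetical test images: the right image is the left image shifted 3 pixels left
W, H = 12, 8
left = [[r * 13 + c * c for c in range(W)] for r in range(H)]
right = [[left[r][c + 3] if c + 3 < W else 0 for c in range(W)] for r in range(H)]
print(block_match_disparity(left, right, y=4, xl=7, max_disp=6))  # 3
```

Repeating this search for every pixel yields a full disparity map. The runtime grows with the image size, the block size and the disparity range.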

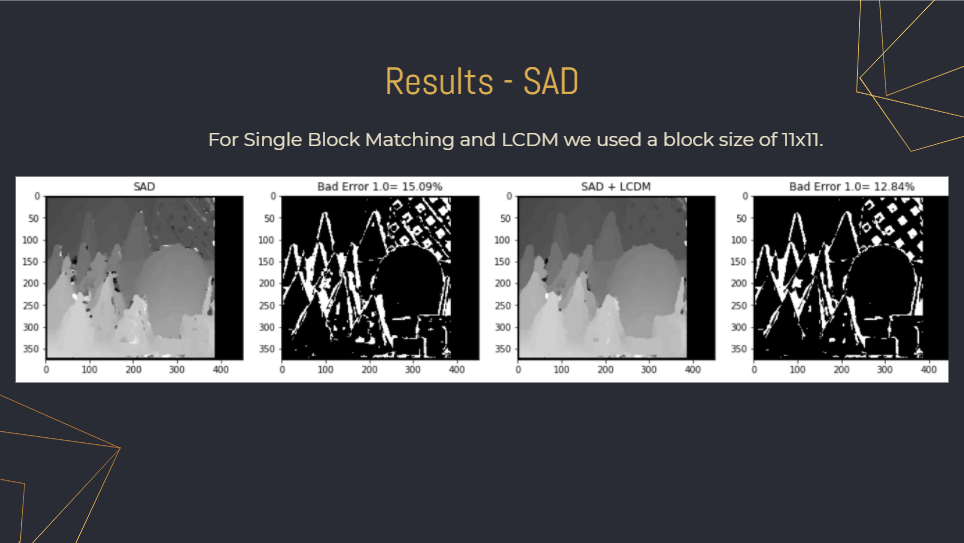

# Results obtained

Visualisation of the depth map as a point cloud.

Result obtained (left), ground truth (right). For visualisation we used Open3D to understand how well the algorithm performed compared with the ground truth, and the visual comparison shows that the algorithm performed well.
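To view a depth map as a point cloud, each pixel (u, v) with depth Z can be back-projected through the pinhole model. This uses the focal length f and the optical centre (cx, cy) from calibration: X = (u - cx) Z / f and Y = (v - cy) Z / f. The minimal sketch below does this in pure Python. It assumes square pixels (one focal length for both axes), and the intrinsic values in the example are hypothetical.

```python
import math

def depth_to_points(depth, f, cx, cy):
    """Back-project a depth map (list of rows) into a list of (X, Y, Z) points."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            # Skip pixels without a valid, finite depth
            if z > 0 and math.isfinite(z):
                points.append(((u - cx) * z / f, (v - cy) * z / f, z))
    return points

# Hypothetical 2x2 depth map and intrinsics
depth = [[2.0, 2.0],
         [0.0, 4.0]]
for p in depth_to_points(depth, f=500.0, cx=0.5, cy=0.5):
    print(p)
```

The resulting points could then be displayed with Open3D. For example, you could convert them to a NumPy array, wrap it in `open3d.utility.Vector3dVector`, assign it to the `points` of an `open3d.geometry.PointCloud`, and show it with `open3d.visualization.draw_geometries`.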

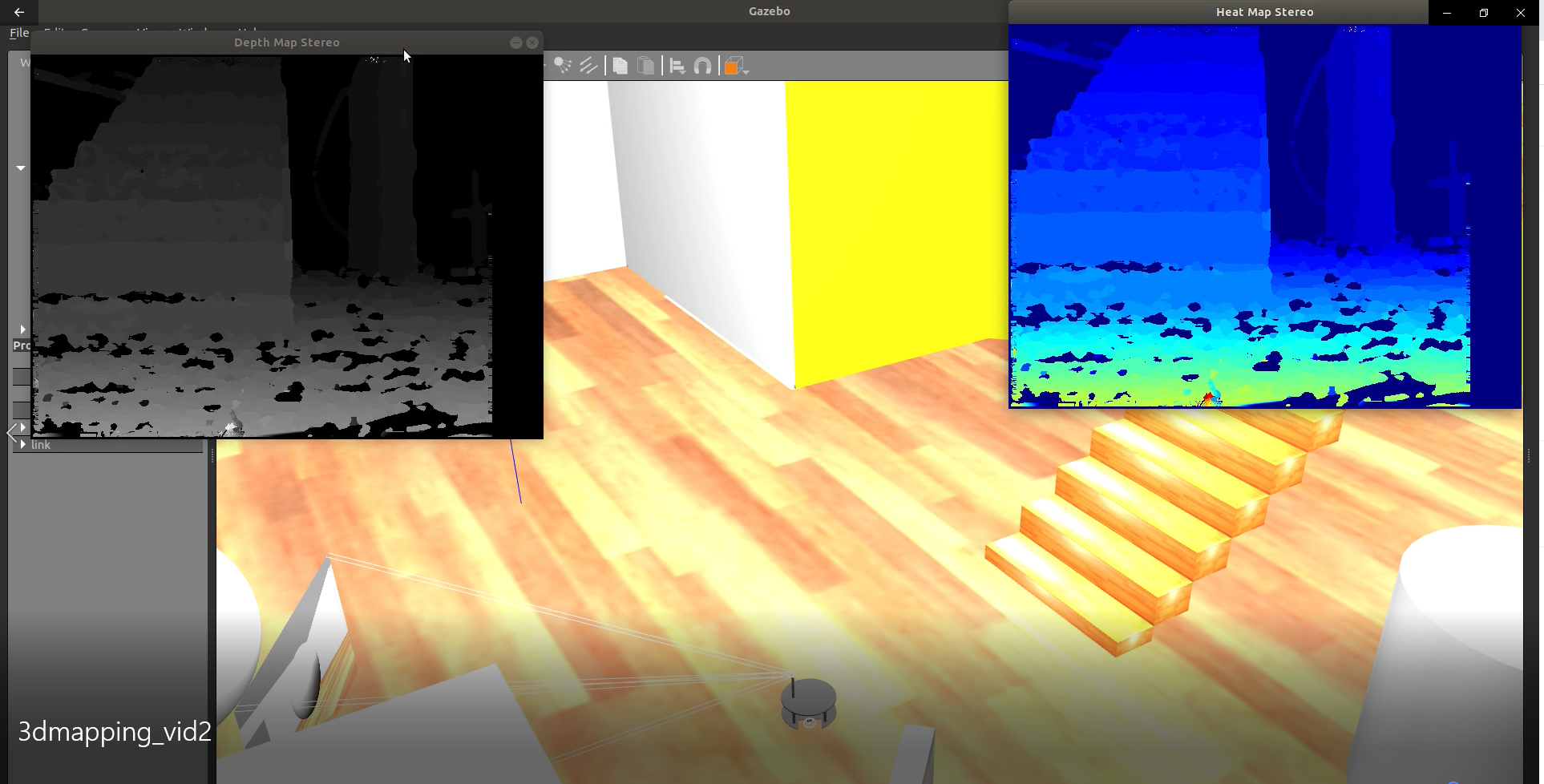

Video of the robot moving around, capturing images with the stereo camera and computing the depth map.

Due to the pandemic, we chose to test our algorithm in the Gazebo simulator, and we designed the whole robot ourselves for this task. Because of the high computation time, we captured images only at selected instants and computed the depth map for each of them. We are working on making the algorithm faster!

Thank You!!!

For the code of the ROS package used in this project, please see the following GitHub link. For the code of the various algorithms used in this project, please see the following GitHub link.

References

- L. Ma, J. Li, J. Ma and H. Zhang, “A Modified Census Transform Based on the Neighborhood Information for Stereo Matching Algorithm,” 2013 Seventh International Conference on Image and Graphics, Qingdao, China, 2013, pp. 533-538, doi: 10.1109/ICIG.2013.113.

- N. Einecke and J. Eggert, “A multi-block-matching approach for stereo,” 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Korea (South), 2015, pp. 585-592, doi: 10.1109/IVS.2015.7225748.

- Q. Yang, R. Yang, J. Davis and D. Nister, “Spatial-Depth Super Resolution for Range Images,” 2007 IEEE Conference on Computer Vision and Pattern Recognition, 2007, doi: 10.1109/CVPR.2007.383211.