GPT-2 refers to a set of Language Models released recently by OpenAI. My objective is to find weird ways that GPT-2 can fail, and also some smart things it is capable of. I’ll be using talktotransformer for this task. It’s a convenient tool that asks for a short text prompt, and then generates several lines of text to continue the prompt (no coding required!).

First, let’s see if you’re paying attention. Check out the following two samples:

Department of applied mechanics and hydraulics of National Institute of Technology - Karnataka (NIT-K), Suratkal will host a 3-day workshop on coastal reservoirs as a sustainable strategy for water security on its Srinivasnagar premises from July 22. T G Seetharam, director, IIT Guwahati, will be the chief guest and inaugurate the seminar. K Uma Maheshwar Rao, director, NITK will preside over the inaugural function.

The seminar will offer a comprehensive overview of the coastal water management sector including hydrometeorology, hydrodynamic, hydrometeorology and hydrography.

It will be addressed by experts in the various fields including engineering, hydraulics, hydrology, hydrography and marine ecology/oceanography, besides coastal water managers and engineers.

Seetharam said while it was an emerging sector with great potential, no clear strategies were being pursued for conservation and recovery of flood prone areas.

Makes sense? What about this one:

Department of applied mechanics and hydraulics of National Institute of Technology - Karnataka (NIT-K), Suratkal will host a 3-day workshop on coastal reservoirs as a sustainable strategy for water security on its Srinivasnagar premises from July 22. T G Seetharam, director, IIT Guwahati, will be the chief guest and inaugurate the seminar. K Uma Maheshwar Rao, director, NITK will preside over the inaugural function.

“We will address the problem of coastal development in a holistic way and in many ways, the sea cannot be separated from the land. We have to look at the whole development area and take the sea into account,” M S Rathore, head of the Centre for Research in Development and Environment (CRED), said.

This is the second such seminar under CRED. The first seminar was held on August 6.

Which one do you think is real, and which one was generated?

I’m dead serious: which one do you think is fake?

You can re-read them carefully to make your choice.

Okay ready?

Hah, trick question! Turns out, both were generated by GPT-2. The real article is this one from the Times of India.

I took the first paragraph from that article (bold in the generated samples), fed it into the transformer, and after a few trials, out came the above two examples.

Notice that the second fake sample talks about a certain Centre for Research in Development and Environment, which does sound like a typical government-style name for an organization. It also gave an acronym, and the initials match up! CRED isn’t even a real organization as far as I know, and the model somehow birthed it out of thin air.

Now the obvious question is - can it generate poetry?

Let’s see what the transformer spews out when prompted with Dylan Thomas’ famous piece:

Do not go gentle into that good night,

Old age should burn and rave at close of day;

Rage, rage against the dying of the light.

When the night is past and the moon is down,

I shall sit and watch it, till I have heard the dawn,

Which I shall know to be a new life.

Old age ought not to be fathomed,

Forthwith shall we know it to be a night;

Rage is, and a good song shall be sung.

And in the hour of my death I shall not sleep;

I shall sing and tell of the night long;

I shall hear your song and say: How sweet the evening

Much deep, so poetry.

Somehow the model knows how poems like these are structured, where the lines should break, and what kind of deep and wise sounding words a romantic poet writes.

And now for some pure mischief:

Kejriwal announces: “I’m a vampire. I’m actually from outer space”

-On his alleged visit in 2013, to the Indian Space Research Organisation (ISRO) to observe the launch of a satellite of its foreign rivals

-Claims to have created the world’s second most powerful economy with “nearly double” of the GDP.

But that’s not all. Mr Kejriwal, who is the chief minister of Delhi, claims to have been abducted last year by the security forces, tortured and forced to drink demon urine.

And he has also said that he is a vampire, which is a type of supernatural creature that feeds on blood.

He said: “So that’s how I was born. It’s not my fault. I got stolen by the forces during a traffic raid. It took my arm off.”

Mr Kejriwal, the former Delhi chief minister, says he is a “vampire” because he believes God has cursed him.

A video obtained by Daily News India of Mr Kejriwal during his first public address was shown in front of the National Conference’s main square in Delhi.

Among other things:

- It knows what a vampire does (supernatural creature that feeds on blood.)

- It associates Kejriwal with the position of CM of Delhi, which is great! But it first says he is the CM, then says he’s the former CM. So it has problems with consistency.

- The article seems to match the general flow of a news piece, albeit confused by the mention of vampires.

How exactly does this thing work?

Bird’s-eye view of GPT-2

GPT-2 stands for Generative Pretrained Transformer 2. Generative because it is, well, generating text. Pretrained because the guys who made it trained it on a HUGE corpus of web pages gathered from outgoing links on Reddit. Pretraining is useful because it lets us fine-tune the model to our particular use case with a lot fewer examples. Transformer because that’s what the building blocks of this thing are called.

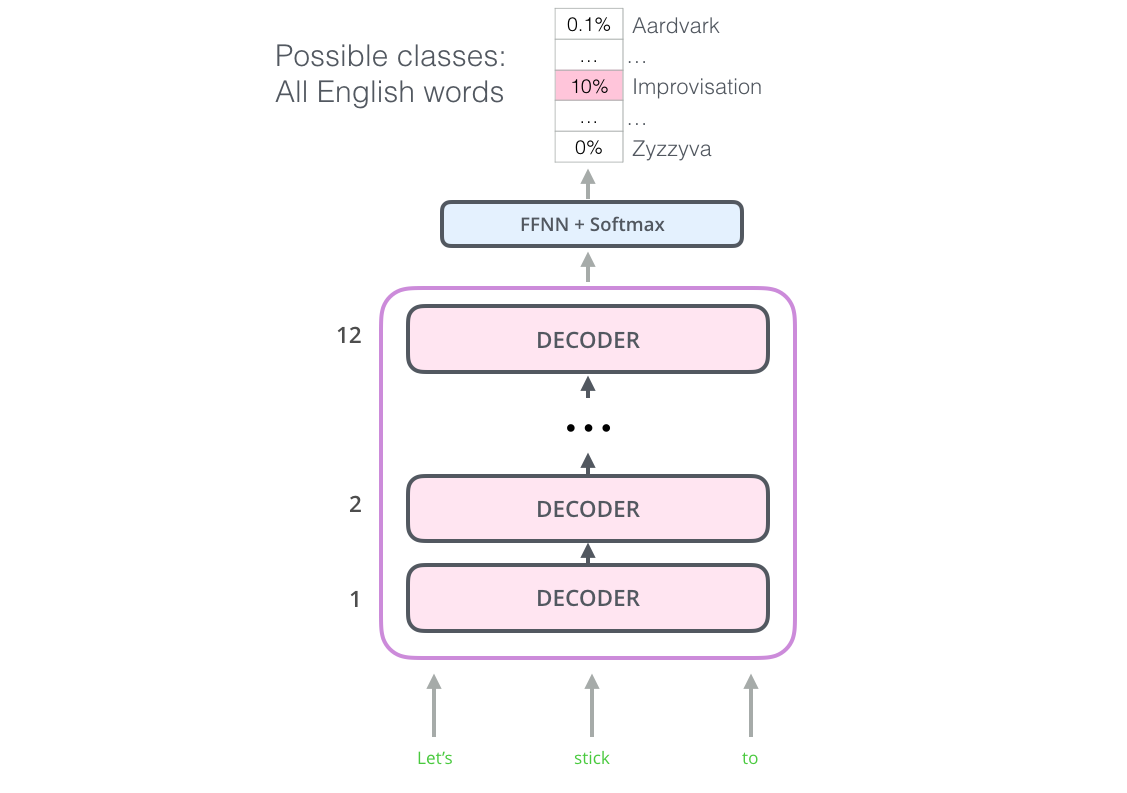

GPT-2 is a Language Model, which is a fancy way of saying that, when given a prompt, it gives a probability distribution over which word should come next.

(It’s not exactly words that it gives probabilities for, more like pieces of words. For simplicity, let’s just assume that it has a vocabulary of words among which it decides.)
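To make "a distribution over the next word" concrete, here is a minimal sketch in plain Python. The four-word vocabulary and the scores are made up for illustration (they are not GPT-2 outputs); the only real technique here is the softmax, which turns raw scores into probabilities that sum to 1.

```python
import math
import random

def softmax(scores):
    """Turn arbitrary real-valued scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy vocabulary and made-up scores for a prompt like "I like to eat".
vocab = ["pizza", "rocks", "quickly", "the"]
scores = [3.0, 0.5, 1.0, 2.0]

probs = softmax(scores)
next_word_distribution = dict(zip(vocab, probs))

# Generating text = sample a word from the distribution, append it, repeat.
rng = random.Random(0)
sampled_word = rng.choices(vocab, weights=probs, k=1)[0]

print(next_word_distribution)
print("sampled:", sampled_word)
```

Sampling (rather than always picking the most likely word) is one reason the same prompt can produce different continuations on different tries.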

But neural networks deal with numbers, not strings. So we convert each word into a fixed-length vector of numbers, so that the input sentences are converted into an array of vectors (all of the same length). This is what’s called an Embedding. GPT-2 also considers the context in which each word is used, and gives a slightly different set of numbers for different contexts.

As an example of why context matters, notice that the word ‘break’ has different meanings in ‘break the glass’ vs ‘summer break’.
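The lookup step can be sketched in a few lines of plain Python. Everything here is a toy assumption: the vocabulary, the vector length of 4, and the random numbers standing in for learned values. Note that this table alone gives ‘break’ the same vector in every sentence; the context-dependent part comes from the layers that sit on top of it, which this sketch does not show.

```python
import random

rng = random.Random(0)
EMBED_DIM = 4  # hypothetical; real models use hundreds of dimensions

# Hypothetical toy vocabulary; each word gets one fixed-length row of numbers.
# In a trained model these numbers are learned, here they are just random.
vocab = ["break", "the", "glass", "summer"]
embedding_table = {
    word: [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)] for word in vocab
}

def embed(sentence):
    """Convert a whitespace-separated sentence into a list of fixed-length vectors."""
    return [embedding_table[word] for word in sentence.split()]

vectors = embed("break the glass")
print(len(vectors), "vectors of length", len(vectors[0]))
```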

So now we have converted the input string into a list of vectors. Next, we feed it into the Decoder block, which essentially looks like this:

Attention is an operation which converts an input sequence to an output sequence of the same length. The output is also a list of vectors of fixed length, just like the input sequence.

MLP is just your garden variety vanilla feedforward net, although it can be a convolutional block as well.

Layer norm rescales each vector so that its values have roughly zero mean and unit variance. It is a method of speeding up the training (by allowing gradients to flow more easily), and adding regularization to the network.
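As a rough sketch, the core of layer norm fits in a few lines of plain Python. This version leaves out the learned scale and shift that real implementations apply afterwards, and the `eps` value is just a common small constant chosen here to avoid dividing by zero.

```python
import math

def layer_norm(vector, eps=1e-5):
    """Normalize one vector to roughly zero mean and unit variance.

    Simplified: the learned scale and shift parameters are omitted.
    """
    mean = sum(vector) / len(vector)
    variance = sum((x - mean) ** 2 for x in vector) / len(vector)
    return [(x - mean) / math.sqrt(variance + eps) for x in vector]

normalized = layer_norm([1.0, 2.0, 3.0, 4.0])
print(normalized)
```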

Also, we use skip connections (those arrows that go around blocks) because they let us directly propagate information from earlier layers to later layers.
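A skip connection is nothing more than "add the input back onto the output". The sketch below shows just that wiring in plain Python. The two sublayers are hypothetical stand-ins, not real attention or a real MLP, and layer norm is left out to keep the example short.

```python
def with_skip(sublayer, x):
    """Apply a sublayer and add its input back on: output = x + sublayer(x)."""
    return [xi + yi for xi, yi in zip(x, sublayer(x))]

# Hypothetical stand-ins for the real sublayers, just to show the wiring.
def toy_attention(x):
    return [0.1 * xi for xi in x]

def toy_mlp(x):
    return [max(0.0, xi) for xi in x]

def toy_block(x):
    """Two sublayers in a row, each wrapped in a skip connection."""
    x = with_skip(toy_attention, x)
    x = with_skip(toy_mlp, x)
    return x

def dead_sublayer(x):
    """A sublayer that contributes nothing."""
    return [0.0 for _ in x]

x = [1.0, -2.0, 3.0]
print(toy_block(x))
print(with_skip(dead_sublayer, x))  # the input passes through untouched
```

The last line is the point: even if a sublayer outputs nothing useful, the original information still reaches the next layer.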

(For more in-depth and hands-on explanations, check out the resources at the end.)

Closing thoughts

You can make GPT-2 do all kinds of fun stuff: generate Lord of the Rings fanfiction, brew up some recipes, fake popular science news and generate some sweet, sweet political propaganda.

When it works, its language use can be wicked good at fooling people who are just skimming (including me, of course). It can hold onto a topic over several sentences, and the flow of words is also quite natural on the surface.

It’s only when you read beyond just the words themselves and try to grasp the big picture that it feels more like it took a big pile of what makes up the internet, mixed the sentences around thoroughly, and spit out a barely coherent soup.

So does this thing have actual language understanding?

Resources

If you wanna play around with GPT-2 yourself:

If you want to dive deep and get your hands dirty with real code, here are some resources:

- Transformers from Scratch: Really intuitive explanations of how the transformer works, including the reasoning behind attention. Shows how to implement one in PyTorch.

- The illustrated BERT, ELMo and co.: A great overview of the various developments that led to the awesomeness of recent NLP models.

- HuggingFace’s transformers library

For an introduction to more fundamental concepts:

- Stanford CS231n Convolutional Neural Networks for Visual Recognition: Nice introduction to machine learning in general, and convolutional nets in particular.

- Neural Networks and Deep Learning: A short and really approachable book that explains the foundations of neural networks, and how to implement some from scratch.

For more fun stuff that Neural Nets do: